The fdmon (Fast Deployment Monitoring) cloud platform is built from a large set of servers (nodes) that provide the resources for its various functions: Analytics, Trending, Front-End, Storage, Publication, IoT Broker, …

Each function is supported by a number of node pairs, and each node pair meets the following requirements:

- The two nodes are located in separate regions, so that they are not exposed to the same regional risks (natural, energy-related, geopolitical, …)

- The two nodes are hosted by separate cloud providers, so that they are not exposed to the same provider-related risks (network routing between the provider's regions, global provider network failure, bankruptcy, human error, …)

- The two nodes run different Linux distributions and kernel versions, so that a single system bug cannot affect both nodes of the pair (this is possible because nodes communicate only through APIs).
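As a minimal sketch, the three pairing rules can be checked mechanically. The `Node` fields, region labels and version strings below are hypothetical, and the distribution/kernel rule is interpreted strictly (both must differ).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    # Hypothetical node descriptor; field names are illustrative only.
    name: str
    region: str
    provider: str
    distro: str
    kernel: str


def pair_violations(a: Node, b: Node) -> list[str]:
    """Return the pairing rules that nodes a and b violate (empty list = valid pair)."""
    violations = []
    if a.region == b.region:
        violations.append("same region")
    if a.provider == b.provider:
        violations.append("same cloud provider")
    if a.distro == b.distro:
        violations.append("same Linux distribution")
    if a.kernel == b.kernel:
        violations.append("same kernel version")
    return violations


# Illustrative pair: different region, provider, distribution and kernel.
a = Node("fe-1", "ch-geneva", "Infomaniak", "Debian 12", "6.1")
b = Node("fe-2", "de-frankfurt", "OVH", "Rocky Linux 9", "5.14")
print(pair_violations(a, b))
```

Returning the list of violated rules, rather than a single boolean, makes it easier to report why a proposed pair was rejected.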

Furthermore, we have implemented a clustering technology that tolerates high latency by reducing the volume of data that must be shared between nodes and by compensating for latency through massive parallelization of interactions between nodes and objects.

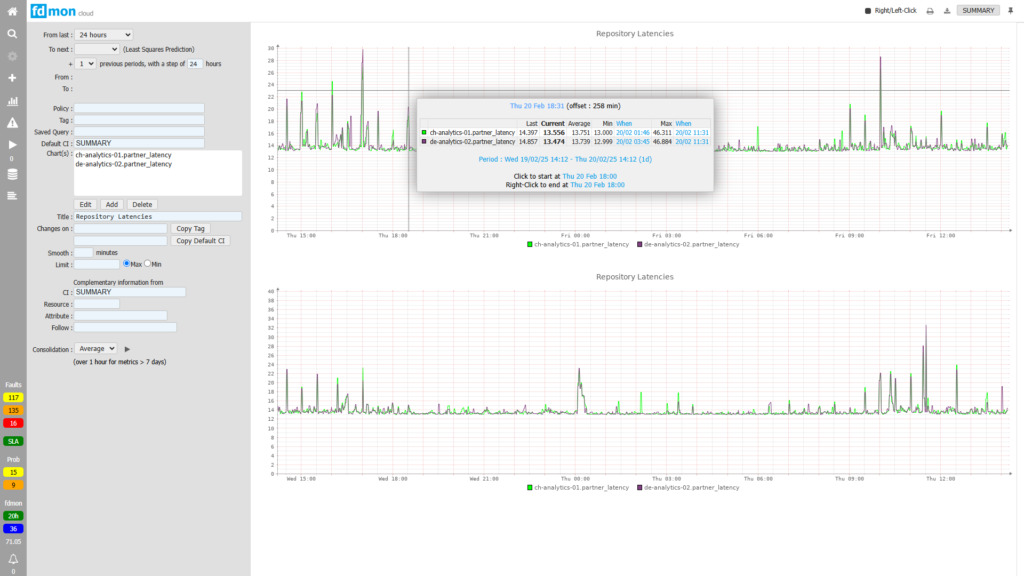
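The text does not describe how the clustering layer parallelizes its interactions. The sketch below only illustrates the general principle behind latency compensation: when N independent requests each cost one round trip, issuing them concurrently costs roughly one round trip instead of N. It uses Python's `asyncio` with a simulated 20 ms delay; `remote_call` and the delay value are hypothetical stand-ins, not fdmon APIs.

```python
import asyncio
import time

SIMULATED_RTT = 0.02  # hypothetical 20 ms round trip between two nodes


async def remote_call(obj_id: int) -> int:
    """Stand-in for one API call to the peer node; waits one simulated round trip."""
    await asyncio.sleep(SIMULATED_RTT)
    return obj_id


async def sequential(ids: list[int]) -> list[int]:
    # Each call waits for the previous one: total is about len(ids) round trips.
    return [await remote_call(i) for i in ids]


async def parallel(ids: list[int]) -> list[int]:
    # All calls are in flight at once: total is about one round trip.
    return list(await asyncio.gather(*(remote_call(i) for i in ids)))


def timed(coro_fn, ids):
    """Run one strategy to completion and measure its wall-clock time."""
    start = time.perf_counter()
    result = asyncio.run(coro_fn(ids))
    return result, time.perf_counter() - start


ids = list(range(10))
seq_result, seq_time = timed(sequential, ids)
par_result, par_time = timed(parallel, ids)
print(f"sequential: {seq_time * 1000:.0f} ms, parallel: {par_time * 1000:.0f} ms")
```

`asyncio.gather` returns results in the order the calls were submitted, so both strategies produce the same data; only the elapsed time differs.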

Each cloud provider combination has been validated in advance for network latency and bandwidth. Below 50 ms, fdmon performance is optimal; below 100 ms, it is acceptable; above 400 ms, the user experience is degraded. Here are some example latencies:

- between Geneva and Frankfurt: around 15 ms

- between Geneva and London: around 16 ms

- between Geneva and New York: around 85 ms

- between Geneva and Dubai: around 130 ms

- between Geneva and Singapore: around 150 ms
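The thresholds above can be expressed as a small classification function. This is an illustrative sketch with a hypothetical function name: the text does not say how latencies between 100 ms and 400 ms are rated, so the function reports them as "unspecified" rather than guessing.

```python
def classify_latency(latency_ms: float) -> str:
    """Map a measured latency to the performance tiers stated for fdmon."""
    if latency_ms < 50:
        return "optimal"
    if latency_ms < 100:
        return "acceptable"
    if latency_ms > 400:
        return "user experience impacted"
    # The 100-400 ms band is not characterized in the text.
    return "unspecified"


examples = {"Frankfurt": 15, "London": 16, "New York": 85, "Dubai": 130, "Singapore": 150}
for city, ms in examples.items():
    print(f"Geneva-{city}: {ms} ms -> {classify_latency(ms)}")
```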

Latency between Storage nodes is monitored continuously, and we occasionally hot-migrate nodes to optimize routes within node pairs or after detecting a bottleneck (this recently happened between OVH Germany and Infomaniak Switzerland, whose route went through the SwissIX Internet Exchange Point). This constraint applies only to Storage nodes.
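A minimal sketch of how a migration candidate might be flagged, assuming a rolling window of latency samples per Storage node pair. The class name, the window size, the use of the median, and the 100 ms trigger (borrowed from the "acceptable" bound above) are all assumptions; the text does not describe fdmon's actual monitoring logic or migration criteria.

```python
from collections import deque
from statistics import median


class PairLatencyMonitor:
    """Hypothetical rolling-window latency monitor for one Storage node pair."""

    def __init__(self, threshold_ms: float = 100.0, window: int = 5):
        self.threshold_ms = threshold_ms  # assumed trigger, not an fdmon value
        self.samples = deque(maxlen=window)  # keeps only the latest samples

    def record(self, latency_ms: float) -> bool:
        """Add a sample; return True when the pair looks like a migration candidate."""
        self.samples.append(latency_ms)
        # Decide only once the window is full, and use the median so that
        # a single spike does not trigger a migration.
        return (
            len(self.samples) == self.samples.maxlen
            and median(self.samples) > self.threshold_ms
        )


mon = PairLatencyMonitor()
readings = [18, 19, 250, 17, 18, 140, 150, 160]  # illustrative samples in ms
flags = [mon.record(r) for r in readings]
print(flags)
```

The isolated 250 ms spike is ignored, while the sustained rise at the end of the series flags the pair.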

If a Front-End node fails, the DNS A records of the customer-specific URLs pointing to it are immediately switched to the remaining nodes; customers can also use alternate URLs during the failover, or at any time. This applies only to the fdmon UI: data traffic from fdmon Proxies (located in customers’ data centers) to the fdmon cloud platform relies on distributed IP addresses in active-active mode.
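The record-selection step of such a DNS failover can be sketched as follows. This is a hypothetical illustration: the function name, the health-check input and the choice to keep the previous records when no node is healthy are assumptions, and the call that actually publishes the records to a DNS provider is left out because the text does not name one. The addresses are RFC 5737 documentation IPs.

```python
def failover_a_records(records: list[str], healthy: set[str]) -> list[str]:
    """Return the A-record set to publish: only Front-End IPs that pass health checks."""
    remaining = [ip for ip in records if ip in healthy]
    if not remaining:
        # Assumed policy: keep the old set rather than publish an empty answer.
        return records
    return remaining


# Hypothetical customer URL backed by two Front-End nodes.
current = ["192.0.2.10", "198.51.100.20"]
healthy = {"198.51.100.20"}  # the node at 192.0.2.10 has failed
print(failover_a_records(current, healthy))
```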

To be eligible for 100% availability, a customer must deploy at least two fdmon Proxies, ideally in separate data centers; Proxy clustering (load balancing and failover) is handled by the fdmon cloud platform.
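The availability rule and the Proxy balancing and failover behavior can be sketched as below. Round-robin selection that skips unhealthy Proxies is an assumed strategy chosen for illustration; the text does not say which balancing algorithm the fdmon cloud platform uses, and the function and Proxy names are hypothetical.

```python
from itertools import cycle
from typing import Callable, Optional


def eligible_for_full_availability(proxies: list[str]) -> bool:
    """Rule from the text: at least two fdmon Proxies."""
    return len(set(proxies)) >= 2


def make_balancer(proxies: list[str]) -> Callable[[set[str]], Optional[str]]:
    """Round-robin over Proxies, skipping any that are currently unhealthy."""
    ring = cycle(proxies)

    def next_proxy(healthy: set[str]) -> Optional[str]:
        # Try each Proxy at most once per call.
        for _ in range(len(proxies)):
            candidate = next(ring)
            if candidate in healthy:
                return candidate
        return None  # no healthy Proxy left

    return next_proxy


proxies = ["proxy-dc1", "proxy-dc2"]
next_proxy = make_balancer(proxies)
print(eligible_for_full_availability(proxies))
print([next_proxy({"proxy-dc1", "proxy-dc2"}) for _ in range(4)])
print(next_proxy({"proxy-dc2"}))  # failover while proxy-dc1 is down
```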

Despite the incidents we have experienced with our various providers, the fdmon cloud platform has had no downtime for any of our customers since its launch in 2017.
